The End of the “Prompt Era”

For much of the generative AI boom, “being good at prompts” became shorthand for being good at AI. Teams compared prompt hacks, influencers sold prompt packs, and job posts even listed “prompt engineering” as a core skill.

RELATED: 7 Essential Tips to Master AI Prompt Engineering – a practical guide to getting better answers from today’s models (and a snapshot of why prompt engineering felt so important in the early GenAI wave).

But the ground is already shifting. Modern models are getting better at inferring intent, expanding vague instructions, and quietly rewriting prompts behind the scenes. At the same time, product interfaces are moving away from clever incantations and toward simple, natural language goals.

Think about your workday. Today, you might still type something like “Act as a market research expert and summarize these customer interviews.” In the near future, you’re more likely to say, “Prioritize what’s blocking customer renewals this quarter,” and an AI agent will automatically decide which tools to call, what data to pull, and how to present its findings. Your value won’t be in crafting the perfect wording; it will be in judging whether the agent’s reasoning, evidence, and recommendations actually make sense.

That shift is accelerated by enterprise adoption of AI at scale, the rise of agentic workflows that act across systems, and growing pressure for trustworthy, auditable, and autonomous AI solutions. As prompting is absorbed into the systems themselves, the scarce skill is no longer “How do I talk to the model?” but “How do I interpret what this model—or this network of agents—is doing, and keep it aligned with my goals and guardrails?”

In this article, we’ll explore why prompting is being automated away, how agentic AI changes the human role at work, and which interpretation and evaluation skills will matter most by 2026.

Prompting Is Being Automated Away

AI is now rewriting prompts internally

Modern AI systems already do a lot of “prompting” on their own. Under the hood, leading models:

- infer missing context from chat history, user profiles, and system messages

- expand short user inputs into richer internal prompts

- break work into substeps, decide when to call tools or APIs, and chain actions together

Tool use, function calling, retrieval-augmented generation (RAG), and long-context “memory” mean much of what used to be manual prompt engineering now lives in the orchestration layer or the model itself. In practice, you might type a short request, but the system builds a large, structured internal prompt you never see.
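To make the hidden prompt concrete, here is a minimal sketch of how an orchestration layer might wrap a short request in instructions, retrieved context, and recent history before the model sees it. Everything here is an assumption for illustration: the function names, the message layout, and the keyword-overlap retrieval, which stands in for the embedding search a real RAG pipeline would use.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    text: str


def retrieve(query: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Toy retrieval: rank documents by words shared with the query (a stand-in for vector search)."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(d.text.lower().split())), d) for d in docs]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in relevant[:k]]


def build_internal_prompt(user_input: str, history: list[str], docs: list[Document]) -> list[dict]:
    """Wrap a short request in hidden instructions, retrieved context, and recent history."""
    found = retrieve(user_input, docs)
    context = "\n\n".join(f"[{d.title}]\n{d.text}" for d in found) or "(no relevant documents)"
    messages = [
        {"role": "system", "content": "You are a workplace assistant. Cite the documents you rely on."},
        {"role": "system", "content": f"Relevant documents:\n{context}"},
    ]
    # Keep only the last few turns so the hidden prompt stays within a context budget.
    messages += [{"role": "user", "content": turn} for turn in history[-3:]]
    messages.append({"role": "user", "content": user_input})
    return messages
```

The user typed five words; the model receives several messages of instructions and context they never wrote.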

READ: Context is All You Need: Why RAG Matters – a deeper dive into how retrieval-augmented generation gives models the context they need without relying on ever-longer prompts.

You can already see this in tools like office copilots and enterprise assistants, which quietly augment vague instructions (“Draft a summary for leadership”) with relevant documents, past threads, and formatting guidance before generating an answer.

UX trends toward natural language and goal-setting

Interface design is shifting in the same direction. Instead of rewarding users who know clever hacks, most products now guide people toward natural language and clear goals:

- “Summarize key risks in these contracts for non-lawyers.”

- “Turn this sales call into a follow-up email and CRM update.”

- “Prioritize the top 5 blockers to renewal from this feedback.”

Sliders, toggles, templates, and drop-downs absorb what used to be long prompt boilerplate. The result: your mental model matters more than your ability to type “act as X” or “use the following chain-of-thought strategy.”

By 2027, Gartner predicts 80% of AI interactions will be context-driven, meaning the system infers user intent from context and natural language without needing prompt tweaks. In practice, this means less reliance on “hacky” prompt techniques and more on declarative statements of the outcome you want. The AI will ask for clarification if needed (“Do you want to invite all team members?”) and then execute. The emphasis is on goal-oriented dialogue rather than perfect prompt syntax.
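As a rough illustration of how sliders, toggles, and drop-downs absorb prompt boilerplate, the sketch below maps a few hypothetical UI settings (an audience drop-down, a word-count slider, a tone toggle) onto a template the user never edits. The template wording, field names, and allowed ranges are assumptions chosen for the example.

```python
from string import Template

# Boilerplate that users once pasted by hand now lives inside the product.
SUMMARY_TEMPLATE = Template(
    "Summarize the attached material for $audience. "
    "Keep it under $max_words words and use a $tone tone. "
    "List the top $top_n items in priority order."
)

ALLOWED_AUDIENCES = {"executives", "non-lawyers", "engineers"}


def prompt_from_controls(audience: str, max_words: int, formal: bool, top_n: int = 5) -> str:
    """Turn UI control values into the full instruction sent to the model."""
    if audience not in ALLOWED_AUDIENCES:  # a drop-down only offers known values
        raise ValueError(f"unsupported audience: {audience}")
    if not 50 <= max_words <= 1000:  # a slider enforces a sensible range
        raise ValueError("max_words must be between 50 and 1000")
    return SUMMARY_TEMPLATE.substitute(
        audience=audience,
        max_words=max_words,
        tone="formal" if formal else "conversational",
        top_n=top_n,
    )
```

The user only picks "non-lawyers" and drags a slider; the structured instruction is assembled for them.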

The rise of no-prompt interfaces

At the same time, we’re seeing the growth of “no-prompt” or “almost-no-prompt” interfaces:

- In-app copilots surface suggestions based on what you’re already doing—editing a document, reviewing a ticket queue, or exploring a dashboard—without you ever opening a separate chat window.

- Domain-specific assistants in tools for CRM, HR, finance, and education come pre-loaded with domain prompts, patterns, and guardrails. The end user rarely types more than a sentence.

- Task-specific agents run on top of enterprise apps, where the “prompt” is effectively the task definition and system configuration, not something a user types each time. Gartner, for example, predicts that 40% of enterprise applications will feature task-specific AI agents by 2026, up from less than 5% in 2025.

In all of these cases, the hard work isn’t typing a detailed prompt. It’s deciding if the system did the right thing, given the stakes and constraints.

Agentic AI Changes Everything

Agents don’t wait for instructions

Traditional chatbots wait for a single instruction and respond. Agentic AI can accept a goal and autonomously:

- decompose it into subtasks

- decide which tools, APIs, or workflows to call

- iterate based on intermediate results

- keep context across steps and over time

RELATED: How Chain-of-Thought Transforms Open-Source LLMs – explore how step-by-step reasoning powers more reliable agents and decision-making.

For example, if you tell an agent “Organize our customer support tickets and draft responses to the top issues,” the agent might plan tasks like:

- analyze all support tickets from the past week

- cluster them by issue type

- prioritize the top categories

- draft response templates for each category

- present a summary report
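The ticket-triage plan above can be sketched as a simple plan-then-execute loop. This is a deliberately simplified, hypothetical version: the planner returns a hard-coded list and each “tool” is a plain Python function, whereas a real agent would ask a model to generate and revise the plan and would call actual ticketing APIs.

```python
from collections import Counter
from typing import Callable


def analyze(state: dict) -> None:
    # Hypothetical tool: keep only tickets from the past week.
    state["recent"] = [t for t in state["tickets"] if t["age_days"] <= 7]


def cluster(state: dict) -> None:
    state["clusters"] = Counter(t["issue"] for t in state["recent"])


def prioritize(state: dict) -> None:
    # Ties keep the order in which issues were first seen.
    state["top"] = [issue for issue, _ in state["clusters"].most_common(2)]


def draft(state: dict) -> None:
    state["drafts"] = {
        issue: f"Thanks for reporting the {issue} problem. Here are the steps we recommend."
        for issue in state["top"]
    }


def report(state: dict) -> None:
    state["summary"] = f"{len(state['recent'])} tickets reviewed; top issues: {', '.join(state['top'])}"


TOOLS: dict[str, Callable[[dict], None]] = {
    "analyze": analyze, "cluster": cluster, "prioritize": prioritize, "draft": draft, "report": report,
}


def plan(goal: str) -> list[str]:
    """Stand-in for a model-generated plan; a real agent would derive this from the goal."""
    return ["analyze", "cluster", "prioritize", "draft", "report"]


def run_agent(goal: str, tickets: list[dict]) -> dict:
    state = {"goal": goal, "tickets": tickets, "log": []}
    for step in plan(goal):
        TOOLS[step](state)
        state["log"].append(step)  # keep context across steps for later review
        if step == "analyze" and not state["recent"]:
            state["summary"] = "No recent tickets; nothing to draft."
            break  # react to an intermediate result instead of finishing the plan blindly
    return state
```

The early exit after `analyze` is a small example of iterating on intermediate results: if the first step finds no recent tickets, the agent stops instead of drafting responses to nothing.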

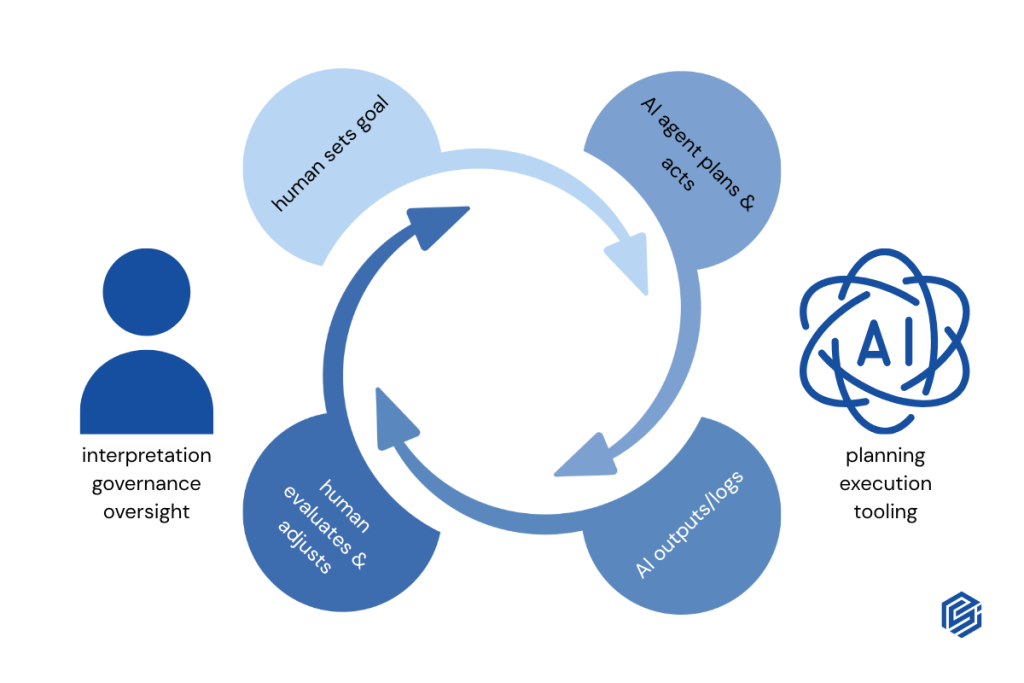

The user’s real job becomes supervision

As agents become more autonomous, your role shifts from instruction writer to system supervisor. Instead of crafting perfect prompts, you:

- set goals, constraints, and acceptable risk levels

- decide where AI can act independently vs. where humans must approve

- review samples of agent behavior and adjust policies or configurations

- monitor logs and dashboards for anomalies, drift, and edge cases

For instance, an agent might draft an email campaign autonomously; a marketer then reviews those emails for tone and accuracy (interpreting the AI’s choices) before approving them. This supervisory role also means setting ethical guardrails and policies at the start (e.g., “Don’t use any personal data in the analysis” or “If unsure about a medical question, flag a human”).

As one industry piece on programmers adopting AI noted, only 43% of developers fully trust AI outputs, so they act as supervisors, guiding and validating AI work rather than blindly accepting it. Across fields, professionals will spend more time interpreting AI plans and results and less time giving detailed instructions upfront.
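Supervision rules like these are often written as configuration rather than prose. Below is a minimal sketch of a gate that runs before an agent acts, assuming a hypothetical three-level risk scale, a self-reported confidence score, and a personal-data flag; it encodes the two example guardrails quoted above. Names and thresholds are illustrative, not taken from any particular agent framework.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO = "execute automatically"
    APPROVE = "hold for human approval"
    ESCALATE = "hand off to a human"
    BLOCK = "refuse the action"


@dataclass
class Action:
    name: str
    risk: str                       # hypothetical scale: "low", "medium", "high"
    topic: str = "general"
    confidence: float = 1.0         # the agent's self-reported confidence
    uses_personal_data: bool = False


def gate(action: Action, min_confidence: float = 0.8) -> Decision:
    """Apply supervisor-defined rules before an agent is allowed to act."""
    if action.uses_personal_data:
        return Decision.BLOCK       # "Don't use any personal data in the analysis"
    if action.topic == "medical" and action.confidence < min_confidence:
        return Decision.ESCALATE    # "If unsure about a medical question, flag a human"
    if action.risk == "low":
        return Decision.AUTO        # e.g., drafting an email for later review
    return Decision.APPROVE         # medium and high risk need a human sign-off
```

In this sketch, the marketer's review corresponds to the `APPROVE` branch: drafting the campaign is low risk and runs automatically, while sending it would be tagged higher risk and wait for sign-off.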

Examples of agentic AI in action:

This shift is not theoretical; it’s already happening across domains:

- Customer service routing agents

Agentic systems can triage incoming tickets, look up information across systems, propose fixes, and even apply simple resolutions. Humans step in for complex cases, adjust routing rules, and audit whether high-risk issues were handled correctly.

- Report-generation agents

Instead of analysts manually querying databases and building slides, an agent can pull data, run analyses, and draft a narrative report. A manager then sanity-checks the numbers, refines the story, and decides what goes to leadership.

READ: How Do You Know that Your AI Model is at Optimal Performance? – a guide to checking whether your models (and agents) are actually delivering the quality you expect.

- Workflow optimization agents

In operations or DevOps, agents watch logs, detect recurring issues, and suggest or execute remediations. Humans define policies, approve higher-risk actions, and keep an eye on dashboards to ensure nothing drifts out of bounds.

RELATED: 5 Steps to Effective AI Benchmarking That Actually Drive Results – practical steps for stress-testing AI systems before you trust them in production.

- Educational tutors and evaluators

Learning platforms use AI to generate questions, adapt difficulty, and give rapid feedback at scale. Teachers focus on interpreting patterns, validating AI feedback, and handling the human side of learning and motivation.

MORE: better-ed – PSI’s voice-enabled assessment platform that uses AI to check real understanding, not just copy-pasted text.

Why Interpretation Is Now the Essential AI Skill

Safety, trust, and governance depend on understanding AI’s behavior

Once AI starts making or influencing decisions, interpretability is no longer optional. Traditional accuracy metrics aren’t enough—you need to understand how systems behave over time and across scenarios. In practice, that means organizations need people who can:

- read logs, traces, and tool calls to see how an outcome was produced

- recognize when a system is overconfident or relying on weak signals

- separate harmless rough edges from systemic risks or bias patterns
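Some of this reading can be supported by tooling. One common approach is to aggregate reviewer flags across many runs: a problem seen once is often a rough edge, while one that recurs across a meaningful share of runs deserves escalation. The sketch below assumes a hypothetical log format (one record per run with a list of flag names, a confidence score, and cited sources) and an arbitrary 10% threshold; appropriate thresholds depend on the stakes.

```python
from collections import Counter


def classify_issues(runs: list[dict], systemic_share: float = 0.10) -> dict[str, str]:
    """Label each flagged issue 'systemic' or 'isolated' by how often it recurs across runs."""
    if not runs:
        return {}
    # Count each issue at most once per run so one noisy run cannot dominate.
    counts = Counter(issue for run in runs for issue in set(run.get("flags", [])))
    return {
        issue: "systemic" if n / len(runs) >= systemic_share else "isolated"
        for issue, n in counts.items()
    }


def overconfident(run: dict, threshold: float = 0.9) -> bool:
    """Flag runs that report high confidence while citing no supporting evidence."""
    return run.get("confidence", 0.0) >= threshold and not run.get("sources")
```

A single typo in one run out of twenty stays "isolated"; the same questionable input showing up in three runs out of twenty crosses the threshold and becomes "systemic".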

Trust in AI comes from knowing why it produced an output. That’s why emerging AI regulation, such as the EU AI Act, emphasizes transparency and explainability: AI decisions should be interpretable and auditable by humans. Without that, issues like bias or misalignment remain hidden until they cause real-world damage.

Enterprises need validators, not just operators

Surveys of enterprise AI adoption show a consistent pattern: usage is up, but confidence in governed, scalable usage lags behind. McKinsey’s State of AI reports highlight widespread generative AI adoption, while analyses like MIT’s “GenAI Divide” show many projects failing to deliver outcomes when they’re deployed without proper integration and oversight. In regulated industries like finance, healthcare, law, or government, you can’t “ship and hope.”

This is creating demand for roles that didn’t exist in the prompt-engineering era—people who can validate and audit AI systems. They:

- stress-test systems before deployment

- certify them for specific use cases and risk levels

- periodically re-evaluate as data, models, and regulations change

For example, a bank using AI to approve loans needs staff who understand the model’s decision process well enough to explain why a particular applicant was denied, and to confirm the denial wasn’t due to illegal bias. In healthcare, if an AI agent assists in diagnosis or treatment planning, doctors (or AI risk managers) must verify that the reasoning is sound and doesn’t overlook critical factors.

RELATED: The Blueprint for Building AI Solutions: A Data Science Lifecycle – see how evaluation and governance fit into a full AI project lifecycle.

Individual careers will hinge on interpretive capacity

From a career perspective, tying your value to one model’s prompt syntax is risky. The ecosystem is moving toward agents, orchestration frameworks, and domain-specific assistants; interfaces will keep changing, but the need for humans who can interpret, challenge, and explain AI outcomes will not. People who can:

- ask the right clarifying questions of AI systems

- diagnose when an outcome is untrustworthy

- explain AI-driven decisions to stakeholders

…will be trusted with high-stakes projects, client-facing work, and leadership roles in AI-enabled organizations. Just as the “computer operator” role faded as computers became easier to use, the hype around “prompt engineer” will fade as AI becomes more self-directed. In its place, new roles are emerging—AI navigators, supervisors, conductors—who oversee fleets of AI agents, keep them aligned with objectives and ethical standards, and intervene when something looks off.

What Skills Will Matter in 2026 and Beyond?

If prompt engineering is becoming part of the infrastructure, what should individuals and teams actually build expertise in?

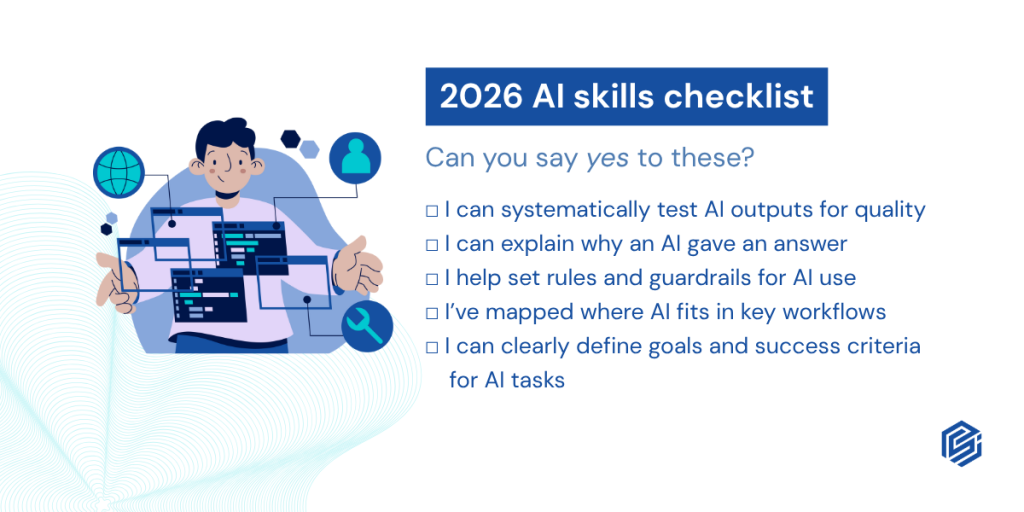

Here’s a 2026 AI Skills Checklist of the durable abilities that will outlast the prompt era:

- Evaluation & Model Verification

The ability to test AI outputs for correctness, completeness, and quality. This includes designing evaluation criteria, running scenario tests, spotting inaccuracies or unrealistic answers, and using tools (fact-checkers, metrics dashboards, sandbox environments) to judge performance.

Think of it as QA for AI. If an AI generates a financial summary, a skilled evaluator can verify the calculations, check that nothing material is hallucinated, and document what was tested. In regulated fields, this also means creating audit trails of what the AI did and verifying those logs. As AI handles more tasks, we’ll need people whose core job is to routinely check and certify the results.
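A minimal sketch of this kind of check for the financial-summary example: recompute each figure the model reports from the source records, mark anything missing or unverifiable, and return a timestamped record that can serve as an audit trail. The metric names, the 0.5% relative tolerance, and the assumption that the model's claims arrive as a dictionary are all illustrative; extracting numbers from free text is a separate and harder step.

```python
from datetime import datetime, timezone


def verify_summary(claims: dict[str, float], ledger: list[dict], tolerance: float = 0.005) -> dict:
    """Compare model-reported figures against values recomputed from source records."""
    revenue = sum(r["amount"] for r in ledger if r["type"] == "revenue")
    costs = sum(r["amount"] for r in ledger if r["type"] == "cost")
    expected = {
        "revenue": revenue,
        "costs": costs,
        "margin": (revenue - costs) / revenue if revenue else 0.0,
    }

    checks = []
    for metric, value in expected.items():
        if metric not in claims:
            checks.append({"metric": metric, "status": "missing"})  # incompleteness
            continue
        # Relative error, so large and small figures are judged on the same scale.
        error = abs(claims[metric] - value) / max(abs(value), 1e-9)
        checks.append({"metric": metric, "claimed": claims[metric], "expected": value,
                       "status": "pass" if error <= tolerance else "fail"})
    # Figures the model reports that cannot be recomputed are treated as possibly hallucinated.
    for metric in claims.keys() - expected.keys():
        checks.append({"metric": metric, "status": "unverifiable"})

    return {
        "checked_at": datetime.now(timezone.utc).isoformat(),  # when the audit ran
        "checks": checks,
        "passed": all(c["status"] == "pass" for c in checks),
    }
```

Storing each returned record alongside the AI output gives reviewers a trail of what was tested and what failed.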

- Reasoning Interpretation

The skill of understanding why the AI arrived at a given output. As more systems expose traces, tool calls, and chain-of-thought variants (internally or via logs), someone has to read and interpret them:

- where did the logic break?

- did the system misread the context or misapply a rule?

- was the right tool called, but with the wrong inputs or assumptions?

This also means basic familiarity with how modern AI reasons (for instance, what chain-of-thought is, or how an LLM might decompose a question). Being able to read reasoning traces or attention-style views—when available—makes you the person who can debug logic, give high-quality feedback to data scientists, or adjust system parameters to correct errors.
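The three debugging questions above can also be asked of a trace programmatically. The sketch below walks a hypothetical agent trace (a list of steps, each with a tool name, its inputs, and an optional error) and reports the first step where the logic breaks: either a failed call, or the right tool called with an input that contradicts the known context. The trace format and field names are assumptions; real agent frameworks log different shapes.

```python
from typing import Optional


def first_break(trace: list[dict], context: dict) -> Optional[str]:
    """Return a description of the first suspicious step in an agent trace, or None."""
    for i, step in enumerate(trace, start=1):
        if step.get("error"):
            return f"step {i} ({step['tool']}): tool call failed: {step['error']}"
        # Right tool, wrong assumption: an input disagrees with a fact the system was given.
        for key, value in step.get("inputs", {}).items():
            if key in context and context[key] != value:
                return (f"step {i} ({step['tool']}): used {key}={value!r} "
                        f"but context says {context[key]!r}")
    return None
```

For example, an agent asked about Q3 that queries Q2 sales data would be caught at the `query_sales` step, even though every tool call "succeeded."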

RELATED: Why Design Thinking is Essential in the Age of AI – how human-centered design keeps AI solutions useful, ethical, and grounded in real needs.

- Governance & Setting Constraints

In the future, many roles will involve being the person who configures the rules and guardrails for AI systems. This is a mix of policy, ethics, and technical configuration.

Skills here include understanding AI risk (bias, privacy, security issues), defining boundaries (what an AI should never do or say), and ensuring compliance with laws and internal ethics. For example, an AI product manager might specify that the AI must refrain from certain kinds of medical advice, or that strong content filters are in place for harassment.

People who can operationalize Responsible AI—translating principles into concrete constraints, review processes, and standard operating procedures—will be in demand. In short: they’re the ones keeping AI on the straight and narrow path.
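Operationalizing a principle usually means turning it into a check that runs on every response. The sketch below is a hypothetical policy layer, not any vendor's API: two regular expressions stand in for PII detection, and a keyword list stands in for a topic classifier that blocks dosage-style medical advice. Keyword and regex matching are far too crude for production; they are used here only to show how a written rule becomes an enforceable constraint.

```python
import re
from dataclasses import dataclass, field

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}
# Hypothetical rule: the assistant must never give dosage-style medical advice.
BLOCKED_TOPICS = {"medical_dosage": ("dosage", "how many mg", "prescribe")}


@dataclass
class PolicyResult:
    allowed: bool
    redacted_text: str
    violations: list[str] = field(default_factory=list)


def apply_policy(text: str) -> PolicyResult:
    """Redact personal data and refuse responses on topics the AI must never address."""
    violations = []
    lowered = text.lower()
    for topic, keywords in BLOCKED_TOPICS.items():
        if any(k in lowered for k in keywords):
            violations.append(f"blocked_topic:{topic}")
    redacted = text
    for name, pattern in PII_PATTERNS.items():
        if pattern.search(redacted):
            violations.append(f"pii:{name}")
            redacted = pattern.sub(f"[{name.upper()} REDACTED]", redacted)
    # PII is redacted and allowed through; blocked topics stop the response entirely.
    allowed = not any(v.startswith("blocked_topic") for v in violations)
    return PolicyResult(allowed, redacted, violations)
```

The design choice worth noticing is that different rules trigger different responses (redact versus refuse), which is exactly the kind of decision a governance owner has to make explicit.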

- Workflow Integration

The ability to look at a business or creative process and decide which parts AI can do, which parts humans should do, and how they hand off to each other. This is about designing workflows where AI is a collaborator, not a bolt-on.

Key skills include:

- mapping end-to-end workflows and handoffs

- deciding which steps are human, which are AI, and which are hybrid

- setting up feedback loops so human corrections improve the system over time

For example, in a document drafting process: humans set objectives and review; AI handles initial drafting and data gathering. In customer support: AI answers common questions; humans handle complex cases, with a clear escalation path. Knowing how to slot AI into real-world operations—and re-engineer processes around that collaboration—is a valuable, portable skill.
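The customer-support split described above can be written down explicitly, which makes the handoffs reviewable. Below is a minimal sketch assuming hypothetical step names, an `owner` field (`ai`, `human`, or `hybrid`), a confidence cutoff for escalation, and a correction log that feeds human fixes back into the answers the AI uses.

```python
from dataclasses import dataclass, field

WORKFLOW = [
    {"step": "classify_question", "owner": "ai"},
    {"step": "answer_common_question", "owner": "ai"},
    {"step": "handle_complex_case", "owner": "human"},
    {"step": "review_sampled_answers", "owner": "hybrid"},  # AI samples, a human reviews
]


@dataclass
class SupportDesk:
    known_answers: dict[str, str]
    escalation_cutoff: float = 0.75
    corrections: list[tuple[str, str]] = field(default_factory=list)

    def route(self, question: str, ai_confidence: float) -> str:
        """AI answers common questions it is confident about; everything else escalates."""
        if question in self.known_answers and ai_confidence >= self.escalation_cutoff:
            return f"AI: {self.known_answers[question]}"
        return "ESCALATED to human agent"

    def record_correction(self, question: str, better_answer: str) -> None:
        """Feedback loop: a human's answer becomes the one the AI uses next time."""
        self.corrections.append((question, better_answer))
        self.known_answers[question] = better_answer
```

Letting a single correction immediately change the AI's answer keeps the example short; in practice, teams usually review corrections before they feed back into a live system.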

- Problem Framing (and Prompt Simplification)

Interestingly, the lasting skill related to prompting is not about complexity, but clarity in defining problems. A Harvard Business Review piece argued that “problem formulation” is a more enduring skill than prompt formulation—and that’s exactly the point here.

Being able to take a vague goal and turn it into a well-scoped ask for an AI (or for a human team) is crucial. It’s less about clever prompt gimmicks and more about good communication. Instead of trying a dozen prompt tricks, you step back and clarify the goal: “We need a two-paragraph summary focusing on X and Y, in a neutral tone.” You articulate the problem in plain language and decide what success looks like.

This is model-agnostic: no matter how AI interfaces evolve, breaking down a problem and clearly specifying an outcome remains fundamental.
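One lightweight way to practice problem framing is to write the ask as a small structured spec before phrasing it in plain language. The sketch below uses a hypothetical `TaskSpec` built from the elements in the summary example above (length, focus, tone) plus explicit success criteria; the field names are illustrative, and the same spec could brief a human colleague as easily as a model.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    goal: str
    length: str
    focus: list[str]
    tone: str
    success_criteria: list[str] = field(default_factory=list)

    def missing(self) -> list[str]:
        """List unanswered framing questions; an empty list means the ask is well scoped."""
        gaps = [name for name in ("goal", "length", "tone") if not getattr(self, name).strip()]
        if not self.focus:
            gaps.append("focus")
        if not self.success_criteria:
            gaps.append("success_criteria")
        return gaps

    def to_request(self) -> str:
        """Render the spec as a plain-language request any model (or person) can act on."""
        if self.missing():
            raise ValueError(f"underspecified task, missing: {', '.join(self.missing())}")
        return (f"{self.goal}. We need a {self.length} focusing on {' and '.join(self.focus)}, "
                f"in a {self.tone} tone. It succeeds if: {'; '.join(self.success_criteria)}.")
```

The useful part is `missing()`: it forces the clarifying questions to be answered before anyone, human or AI, starts work.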

How PSI Prepares Teams for the Post-Prompt Era

At Predictive Systems Inc. (PSI), we’ve seen this shift up close with clients across education, legal, and enterprise settings. The bottleneck is rarely “we don’t know the right prompt”; it’s “we’re not sure how to evaluate, govern, and integrate AI safely.”

That’s why our PSI AI Academy programs and pilot projects focus less on prompt tricks and more on interpretive capability: reading AI reasoning traces, running evaluation scenarios, stress-testing agent behavior, and designing human-in-the-loop workflows. We treat AI as a powerful collaborator that still needs supervision, not as an oracle.

It ties back to our core belief: your data, your AI, your way. Interpretation and governance are what turn generic AI into systems that are truly yours: aligned with your data, your policies, and your context.

READ: PSI AI Academy – our training programs that focus on evaluation, governance, and building real-world AI supervisors and collaborators.

RELATED: Winning in the AI Market: Strategies Every Business Must Know – how startups and SMEs can compete using ethical, high-performing AI.

The Bottom Line

The so-called “prompt era” of AI was never destined to last. It was a transitional phase where humans had to adapt to early AI quirks. Prompting is becoming a behind-the-scenes detail; interpretation is a lasting capability.

In a future filled with agentic AI systems that proactively make plans and take actions, the human role becomes even more pivotal. We won’t be the ones writing detailed instructions at every turn—we’ll be the ones guiding strategy, providing oversight, and ensuring the AI’s actions align with our goals and values. It’s like moving from being the driver of a single car (micromanaging every turn) to being air traffic control for a fleet of autonomous drones—a higher-level oversight role.

“Agents need human supervision” is a fundamental principle that’s emerging. No matter how advanced autonomy gets, there must be accountable humans in the loop or above the loop—people who understand the why behind decisions and who can intervene when needed. Those who develop interpretation and governance skills will be the leaders of AI-enhanced workplaces, bridging the gap between what AI can do and what businesses or societies should do.

As we approach 2026, it’s time to adjust our mindset. The future belongs to people who understand how AI thinks. They will be the translators, trustees, and trailblazers ensuring AI is used responsibly and effectively. In your own career and company, look for ways to shift from prompt-heavy work to interpretation-heavy work. Invest in understanding the models, not just playing with them.

When in doubt about where you add value in an AI world, focus on what AI can’t yet do well by itself. It can generate content, but it can’t judge whether that content is appropriate for your specific context—that’s you. It can plan actions, but it can’t guarantee the ethical or strategic soundness of those actions—that’s on you. It can give answers, but it’s up to you to ask why and make sense of them.

Prompt less. Interpret more. That’s how we make sure AI systems serve our goals—and why, by 2026, interpreting AI will matter far more than prompting it.

References:

- Neeley, Tsedal, et al. “Generative AI: Insights You Need from Harvard Business Review.” Harvard Business Review Press, 2023. (Excerpt on prompt engineering becoming obsolete)

- The Code Alchemist. “Prompt Engineering Will Be Obsolete in 2 Years — Here’s Why You Should Care.” Medium, May 24, 2025. (Discussion of AI understanding intent, auto-prompting, and context-driven interactions)

- Bayly, Erin & Bayly, Mike. “NZ’s two-tier AI economy; AI adoption playbook to fix it.” The AI Corner, Nov 9, 2025. (Citing McKinsey State of AI 2025 stats: 88% using AI, 23% scaling)

- Barber, Kris. “How AI Is Rewiring the Enterprise: Key Takeaways from McKinsey’s 2025 State of AI Report.” DunhamWeb, June 18, 2025. (Noting risk/governance gap – few firms mitigating AI risks)

- Ritchie, Dave. “Analytical AI: The Quiet Powerhouse in a Generative World.” Web Pro News, Nov 12, 2025. (Highlights agentic AI’s rise with 64% experimenting, 23% scaling; underscores need for analytical foundations)

- OpenAI. “Building Agents.” OpenAI Developer Guides, 2025. (Definition of AI agents and OpenAI’s tools for agents – models, tools, memory, orchestration)

- Hassabis, Demis & Kavukcuoglu, Koray. “Google introduces Gemini 2.0: our new AI model for the agentic era.” Google Blog, Dec 2024. (Announcement of Gemini’s agentic capabilities: planning, tool use, multimodal reasoning enabling autonomous experiences)

- ClickUp Blog. “How AI Is Reshaping Programmers’ Roles Faster Than You Think.” Nov 13, 2025. (Describes developers shifting from coding to “AI supervisor” roles; trust issues require humans in loop)

- Zhou, A. et al. “Hallucinations Not Always Prompt-Driven – Survey of LLM Hallucination Attribution.” Frontiers in AI, 2025. (Study finding many hallucination causes lie in model/data issues, not just user prompts)

- Hain, Moritz. “Interpretable Reasoning as a Regulatory Requirement.” Sapien.io blog, Nov 7, 2025. (Discusses regulators demanding audit trails of AI reasoning, need for full interpretability in enterprises)

- Lambert, Nathan. Interview with Ross Taylor (former Meta AI Research Lead). Interconnects, Aug 8, 2024. (Meta’s focus on LLM reasoning and agents – mentions role leading “reasoning team” and agent research at Meta)

- Chang, Peter. “Deep Dive into AutoGPT: The Autonomous AI Revolutionizing the Game.” Medium, Apr 24, 2023. (Explains how AutoGPT breaks goals into sub-tasks and operates in a Plan–Act–Learn loop, illustrating autonomous agent behavior)